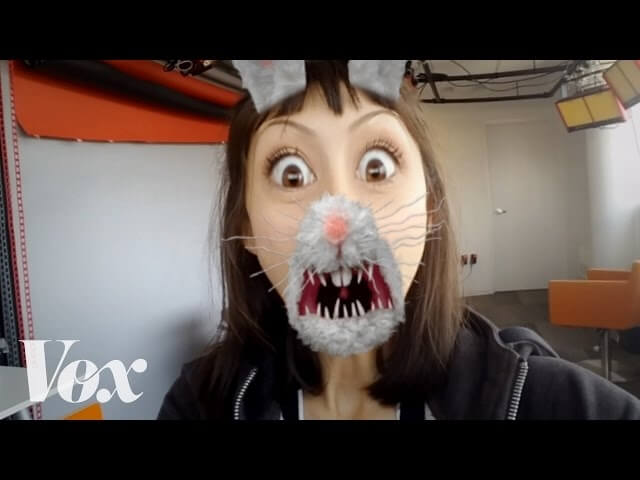

There’s some impressive technology behind ridiculous Snapchat filters

Snapchat calls them “lenses.” Its users call them “filters.” Either way, everyone knows what these applications do: They add flower crowns, bunny ears, horn-rimmed glasses, virtual makeup, and other accessories to images of people’s faces in real time. But how on earth does this technology work, where did it come from, and what does it all ultimately mean for humanity? These are among the questions raised and answered by “How Snapchat’s Filters Work,” a new episode of Vox’s informative web series Observatory by Joss Fong and Dion Lee.

As Fong learns, the story behind Snapchat’s filters is partly shrouded in mystery, thanks to the machinations of corporate politics. But some facts can be clearly established. The technology behind these “augmented reality” filters comes from a company called Looksery, acquired by Snapchat for a cool $150 million last September. “Are virtual bunny ears really worth $150 million to anyone?” a cynic might ask. The answer is yes, considering the potential applications of this technology. Augmented reality might be the next frontier of advertising, and Snapchat wants in on the ground floor.

Snapchat’s filters are an example of a fast-rising field called computer vision. “Those are applications that use pixel data from a camera in order to identify objects and interpret 3-D space,” Fong explains. In this case, the three-dimensional “objects” being “interpreted” are people’s faces. Computers have gotten frighteningly good at detecting faces in the last few years, at least when people look straight at the camera. Detection boils down to measuring contrasts between dark and light parts of an image and then feeding that information through a facial detection algorithm. Recognizing and mapping individual facial features is a separate step. It relies on a statistical model of face shape, built from hundreds or even thousands of photos. A computer can now match the data it receives from a camera to its own learned model of a human face. And now a virtual “doggy nose” can be applied to that human face. Yay for technology, right?
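
For the curious, here is a rough sketch of what “recognizing contrasts between dark and light parts of an image” can look like in code. It is modeled on the rectangle-contrast features used by the well-known Viola-Jones face detector. That choice is an assumption made for illustration, since neither Snapchat nor Looksery has said exactly what runs inside the app. The function names and the toy 4x4 “image” are made up for the example.

```python
def integral_image(img):
    """Build a summed-area table, padded with one extra row and column of zeros."""
    h, w = len(img), len(img[0])
    ii = [[0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii, top, left, height, width):
    """Sum of the pixels in a rectangle, using four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def two_rect_feature(ii, top, left, height, width):
    """Brightness of the lower half of a window minus the upper half.

    A large positive value means "dark band above a bright band",
    which is roughly what an eye region above cheeks looks like.
    """
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return lower - upper


# Toy grayscale patch (hypothetical data): dark rows over bright rows.
patch = [
    [10, 10, 10, 10],
    [10, 10, 10, 10],
    [200, 200, 200, 200],
    [200, 200, 200, 200],
]
ii = integral_image(patch)
score = two_rect_feature(ii, 0, 0, 4, 4)
print(score)  # 1520: strong dark-over-light contrast
```

A real detector slides thousands of these little contrast tests across the frame and combines their scores to decide whether a window contains a face. A single test like this one says almost nothing on its own.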

[via Laughing Squid]